MOV, WMV, AVI, MPEG, MP3, OGG, WMA, … Media Formats Explained

Like my “Top 13 things not to do” article, this page is mainly intended to channel my irritation caused by things that bother me on the internet. The topic is (multi)media formats and the widespread lack of knowledge thereabout. Many people do not know the difference between a container format and a codec. Others have some—mostly unjustified—hatred against certain media formats and try to justify this hatred with pseudo-knowledge when bashing those formats on forums and newsgroups. This hatred is often rooted in ignorance. Instead of descending to the same level as those people and insulting them on the same forums, here I try to give a shot at education.

Summary

Because this text is quite long, here's a summary.

A media file, like one having a file extension of MOV, MKV, AVI, or WMV, is a container file. If you're talking about a ‘format,’ you are actually talking about the container format. A container file specifies how the data streams inside it are organised, but does not say anything about how the actual data is represented. This is the task of the codecs. A codec describes how video or audio data is to be compressed for storage and decompressed for playback. Theoretically, one could use any codec inside any container format. However, codecs are traditionally licensed exclusively to a certain format. For instance, the Sorenson codec used to be only found in QuickTime files and WMV video is only used in Windows Media files.

Many people don't know the difference between formats and codecs, hence think that all QuickTime files use the infamous Sorenson codec and are a pain to play. Or, they mistakenly think that AVI files will always be good quality because they would supposedly use the DIVX codec.

There is no ‘ideal’ format for all situations. Some formats are easier to use on certain platforms, some are nearly impossible to use. If you need to embed movies in your website, the best way to deal with this problem is to give the user a choice between several different formats, or just stick to the HTML5 standard once it has settled on a final decision for the video specification.

What is a container format?

The most common mistake people make is confusing container formats with codecs. Many people used to hate QuickTime, but in fact what they were actually hating was the Sorenson codec, even though they didn't even know what that is. Other people think AVI is a great format because “it delivers good quality.” I can easily make you an AVI whose quality will make you puke. And still other people wonder why one AVI or MOV plays fine on their computer while another one gives no image or sound.

When you download or stream an AVI, MOV, or WMV movie, you're downloading a container file. As the name says, it is a file which contains something else. In the specifications for MOV or AVI files, you will find nothing about how to represent, compress or decompress actual video frames or audio samples. The only thing these files do is provide a wrapper around data streams. When talking about a ‘format,’ you are talking about the structure of a container file. So saying that the AVI format has good video compression is nonsense, because the format has nothing to do with how the video is compressed.

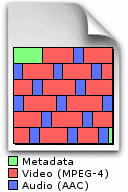

Inside those container files, there are one or more data streams. The most common files have one video stream and one audio stream, but in more advanced formats like QuickTime or Matroska, there can be any number and type of streams. Any container that is designed for streaming (either from a sequential medium like a DVD, or the internet) will interleave the streams in chunks. This ensures that data that belongs together temporally, for instance audio chunks that belong with certain video frames, can also be read from approximately the same location inside the file, and will arrive together when streamed. Again, the format specifications only say how these streams are to be included in the file, not what's in the streams themselves. This is where the codecs come into play.
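To make the interleaving idea a bit more tangible, here is a minimal Python sketch. It is not the layout of any real container: the `Chunk` class, its field names, and the `interleave` function are all made up for this illustration, and the payloads are just placeholder bytes that a real codec would have produced.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Chunk:
    stream_id: int     # which stream this chunk belongs to (hypothetical field)
    start_time: float  # presentation time in seconds
    payload: bytes     # opaque codec data; the container does not look inside


def interleave(streams: List[List[Chunk]]) -> List[Chunk]:
    """Merge per-stream chunk lists into one list ordered by start time."""
    merged = [chunk for stream in streams for chunk in stream]
    # sorted() is stable, so chunks with equal start times keep stream order.
    return sorted(merged, key=lambda chunk: chunk.start_time)


# Two streams with one chunk every half second.
video = [Chunk(0, t * 0.5, b"V") for t in range(4)]
audio = [Chunk(1, t * 0.5, b"A") for t in range(4)]

layout = interleave([video, audio])
order = "".join(chunk.payload.decode() for chunk in layout)
print(order)
```

The point of the sketch is that the container code never inspects `payload`: it only decides where each chunk goes in the file, so that video and audio for the same moment end up next to each other.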

In almost all cases, video and audio data will be compressed, which means it will be stored in a way that takes less space than if the data were simply represented in raw form. The compression scheme will typically be specified by the codec and is often an integral part of it. A simple codec could take a raw stream of data and send it through a compression scheme like ZIP, but most practical codecs will try to do something more advanced. Compression schemes are divided into two categories: a codec that preserves the original data with perfect accuracy is called a lossless codec, while a codec that is unable to perfectly reconstruct the original data is called a lossy codec. Lossy codecs are designed such that they degrade the signal in a way that is minimally visible or audible. They do this by throwing away the data that is least noticeable according to a so-called psychoacoustic or psychovisual model.
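The difference between lossless and lossy can be shown with a toy example in Python. This is only a sketch under simple assumptions: the ‘samples’ are invented bytes, the lossless part uses the standard `zlib` module (DEFLATE, the scheme also used inside ZIP files), and the lossy part merely drops the lowest bits of each sample, which is far cruder than the perceptual models real codecs use. The `quantise` helper is hypothetical.

```python
import zlib

# Invented 8-bit "samples": a slightly wobbling signal, repeated.
raw = bytes([100, 101, 103, 102, 100, 99, 101, 100] * 64)

# Lossless: compress and decompress, the original comes back bit for bit.
packed = zlib.compress(raw)
restored = zlib.decompress(packed)


def quantise(data: bytes, bits_dropped: int = 3) -> bytes:
    """Toy lossy step: clear the lowest bits of every sample."""
    mask = 0xFF & ~((1 << bits_dropped) - 1)
    return bytes(sample & mask for sample in data)


# Lossy: information is discarded before compression and cannot be recovered.
lossy_packed = zlib.compress(quantise(raw))
lossy_restored = zlib.decompress(lossy_packed)

worst_error = max(abs(a - b) for a, b in zip(raw, lossy_restored))
print(restored == raw, lossy_restored == raw, worst_error)
```

After the lossy step the decoded data is close to the original (each sample is off by less than 8 here) but never identical again, no matter how it is decoded.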

Below is a short overview of how container formats evolved over time.

What is a codec?

Remember that a container file is nothing more than a wrapper around a bunch of data streams. The structure of the video and audio data inside those streams depends on which codec was used to encode them. Codec stands for coder/decoder. Theoretically, one could just take raw video and dump it into a file. This however would make the file monstrously huge, hence the need for encoding.

A video codec describes how to convert moving images into a stream of bits that can be stored in a digital file, or vice versa. For the simplest codecs these conversions are straightforward, but the gain w.r.t. raw video is small. For the more advanced ones the conversion can be extremely complicated, yielding a much better compression. For instance the video codecs in the MPEG-4 group will try to represent video frames as an intricate puzzle of tiny blocks that refer to similar-looking blocks at different locations in either the same or previous and following frames, with some extra data attached that describes the smaller differences between each block and its references. All this is then represented in such a way that it requires as little memory as possible.

Something similar holds for sound although as opposed to uncompressed video, uncompressed audio is still somewhat tractable. In the early days of QuickTime and AVI, movies would actually use uncompressed audio, but the sampling rate and number of bits per sample were low to keep the file size reasonable. This gave the sound a crunchy and dull quality. The movies from back then needed to be very short regardless, due to the poor efficiency of both this raw audio stream as well as of the contemporary video codecs.

The kind of approach used for video doesn't work very well for audio, hence the most advanced lossy audio codecs work in a different way. They usually chop up the sound into short segments whose frequency components are determined. Then a representation is made that tries to describe the frequency content in a minimal way, such that a human will not hear the distortion caused by the discarded information.
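As a rough illustration of this frequency-domain idea, the Python sketch below takes one short segment, computes its frequency components with a plain discrete Fourier transform, discards the weak ones, and reconstructs the sound. It rests on heavy simplifications: real codecs typically use other transforms (such as the MDCT) and a psychoacoustic model, whereas here a fixed threshold of 5% of the strongest component stands in for ‘inaudible’. All names and numbers are made up for the example.

```python
import cmath
import math


def dft(samples):
    """Plain O(n^2) discrete Fourier transform."""
    n = len(samples)
    return [sum(samples[t] * cmath.exp(-2j * math.pi * k * t / n)
                for t in range(n)) for k in range(n)]


def idft(spectrum):
    """Inverse transform, returning the real part of each sample."""
    n = len(spectrum)
    return [sum(spectrum[k] * cmath.exp(2j * math.pi * k * t / n)
                for k in range(n)).real / n for t in range(n)]


N = 64  # one short segment
# A loud tone plus a very faint one.
segment = [math.sin(2 * math.pi * 4 * t / N)
           + 0.01 * math.sin(2 * math.pi * 20 * t / N) for t in range(N)]

spectrum = dft(segment)
# Stand-in for a perceptual model: drop everything below 5% of the peak.
threshold = 0.05 * max(abs(c) for c in spectrum)
kept = [c if abs(c) >= threshold else 0 for c in spectrum]

decoded = idft(kept)
n_kept = sum(1 for c in kept if c != 0)
max_error = max(abs(a - b) for a, b in zip(segment, decoded))
print(n_kept, "of", N, "components kept, worst sample error", max_error)
```

Only the components of the loud tone survive, so far fewer numbers need to be stored, and the error that remains is exactly the faint tone that was thrown away.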

Below is a short overview of both the first video codecs as well as audio codecs and how they evolved.

The reason why people often confuse codecs with formats is that traditionally codecs are linked with formats. The Sorenson codec used to be licensed exclusively to Apple, hence Sorenson was only to be found in MOV files. The original DivX codec was a hacked Microsoft MPEG-4 codec used in the first pirated AVI movies, hence DivX is often confused with AVI. And with the name correction of the non-standard AVI into DIVX, it is clear that a file with a ‘divx’ extension is only intended to use the DivX codec.

However, MOV supports a myriad of other codecs next to Sorenson, and the same goes for MKV, AVI, and DIVX. Technically speaking there's no reason why you couldn't use a WMV stream inside an AVI or MOV file, or a Sorenson stream in a WMV file. The only barriers against this are potential lack of support in the format for advanced features of the codec, and legal barriers. MOV files actually exist with DivX video streams inside them. Having an MP3 audio track in a MOV has become quite common, even though the standard QuickTime software does not give you the option to use this codec for audio. Even though the original AVI specification didn't allow the use of the DivX or MP3 codecs, the number of AVI files with these codecs is now to be counted in the millions.

The tendency towards hard-linking a format with a (set of) codec(s) is both good and bad. The good is that one immediately knows what codec such a file uses, the bad is that you're stuck with that codec. It is less confusing for the average user but more frustrating for the more professional users. That's why QuickTime is a popular format for editing video: it allows any codec to be used. A single MOV file can even contain multiple streams with different codecs, at different time offsets inside the file.

What does ‘bitrate’ mean?

In the context of digital video and audio, you will often encounter the term bitrate, but what does this word really mean? Bit rate, or bitrate as I refer to it here, is strictly speaking the number of bits per second that are used to represent a video or audio stream on disk or when streaming it over a network. Because of the large size of video streams, video bitrate will often be measured in megabits per second, audio typically in kilobits per second. Because there are 8 bits in one byte, you have to divide the bitrate by 8 to end up with a value of bytes per second.
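The division by 8 is easy to get wrong in practice, so here is a small Python sketch of the arithmetic. The function names and the example bitrates are made up, decimal prefixes are assumed (1 Mbit = 1,000,000 bits), and container overhead is ignored.

```python
def bytes_per_second(bitrate_bps: float) -> float:
    """Convert a bitrate in bits per second to bytes per second."""
    return bitrate_bps / 8


def stream_size_bytes(bitrate_bps: float, duration_s: float) -> float:
    """Size of a stream with the given average bitrate and duration."""
    return bytes_per_second(bitrate_bps) * duration_s


video_bps = 5_000_000  # hypothetical 5 Mbit/s video stream
audio_bps = 128_000    # hypothetical 128 kbit/s audio stream
duration_s = 90 * 60   # a 90-minute movie

total_bytes = stream_size_bytes(video_bps + audio_bps, duration_s)
print(f"{total_bytes / 1e9:.2f} GB")
```

With these numbers the movie comes out at roughly 3.46 gigabytes, of which the audio accounts for only a small fraction.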

Since bitrate is defined as a size figure per time unit, one needs to specify the time span for the measurement. This will vary depending on the situation. For instance one could just define the average bitrate across the whole duration of a video, but when it comes to ensuring compatibility with hardware limitations, the bitrate is measured continuously across a moving time window that may be even shorter than a second.
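The gap between the average bitrate and the bitrate over a short moving window can be sketched in Python as follows. The per-frame sizes are invented, and the two functions are hypothetical helpers rather than part of any real tool.

```python
def average_bitrate(frame_bits, fps):
    """Average bitrate in bits per second over the whole clip."""
    duration_s = len(frame_bits) / fps
    return sum(frame_bits) / duration_s


def peak_bitrate(frame_bits, fps, window_frames):
    """Highest bitrate seen in any moving window of the given length."""
    peak = 0.0
    for start in range(len(frame_bits) - window_frames + 1):
        window = frame_bits[start:start + window_frames]
        peak = max(peak, sum(window) * fps / window_frames)
    return peak


fps = 25
# Two seconds of video: mostly small frames with a short, busy burst.
frame_bits = [10_000] * 20 + [80_000] * 5 + [10_000] * 25

avg = average_bitrate(frame_bits, fps)
peak = peak_bitrate(frame_bits, fps, window_frames=5)  # 0.2 second window
print(avg, peak)
```

Here the average is 425 kbit/s, but over the 0.2 second window that covers the burst the stream needs 2 Mbit/s, which is the figure that matters for a decoder or network link with a hard limit.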

For raw uncompressed video, there is a predictable relation between bitrate and the size of the video frames and number of frames per second. The bitrate is dictated by those parameters and there is no way to vary the bitrate independently from them.
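That relation is a simple product, sketched below in Python. The function name is made up, and the example assumes 24 bits per pixel (8 bits for each of three colour channels, no chroma subsampling), which is only one of several possible raw representations.

```python
def raw_video_bitrate(width, height, bits_per_pixel, fps):
    """Bitrate in bits per second of uncompressed video."""
    return width * height * bits_per_pixel * fps


# Full HD at 25 frames per second, assuming 24 bits per pixel.
bps = raw_video_bitrate(1920, 1080, 24, 25)
print(f"{bps / 1e6:.0f} Mbit/s, or {bps / 8 / 1e6:.0f} MB per second")
```

This comes to about 1244 Mbit/s, or some 156 megabytes for every second of video, which shows why raw video is rarely stored as-is.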

With the introduction of lossy codecs however, things became less predictable. The idea of a lossy codec is to spend as few bits as possible on representing the video or audio stream, while keeping the encoded material as indistinguishable from the original as possible. The cut-off point where the codec starts throwing away information is configurable by whoever encodes the video. Files can be made smaller by changing the quality settings, but the more information is thrown away, the higher the risk of visible or audible degradation of the material (so-called compression artefacts). The earliest lossy codecs still had a fixed reduction ratio in bitrate compared to uncompressed material, but later on they became more advanced and started throwing away only information that is deemed invisible or inaudible according to some configurable threshold. In the lists of video and audio codecs below, I give some short hints at how successive codecs became better at this.

What this means is that with modern codecs, the bitrate required to represent a video file at good quality, depends highly on the content of the video. I can easily illustrate this with two simple static images encoded as JPEG files:

Both these images have the exact same dimensions and are encoded with the exact same JPEG quality parameters. Yet, the first image only takes 1.3 kBytes while the second one takes 15.3 kBytes. The reason is that the first image only contains smooth low-frequency features, while the second one is almost pure noise. In information theory terms, the second image has a higher entropy or information content than the first one. To represent it with the same accuracy, more information is needed.

I can shrink the file size of the second image to match the smooth image, by lowering the JPEG quality level. To do this, I had to go down to a level that nobody would ever want to use in practice. As shown above, the result is very visibly distorted. To give you an idea what a normal image looks like at that level, I have also included a fragment of one of the above illustrations, compressed at that same quality level.

This is what happens when enforcing too low a bitrate: the result looks awful. The encoding scheme of video codecs is much more complicated than the one for JPEG, but similar principles do hold.
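The effect of content on compressed size is easy to reproduce in Python. The sketch below does not use JPEG: it builds two invented 64×64 greyscale ‘images’ as raw bytes, one smooth gradient and one pure noise, and runs both through the standard `zlib` compressor as a stand-in. The sizes it prints are therefore not comparable to real JPEG file sizes; only the difference between them matters.

```python
import random
import zlib

WIDTH = HEIGHT = 64

# Smooth image: a diagonal gradient from black to white.
smooth = bytes((x + y) * 255 // (WIDTH + HEIGHT - 2)
               for y in range(HEIGHT) for x in range(WIDTH))

# Noisy image: every pixel is an independent random value (fixed seed).
rng = random.Random(0)
noise = bytes(rng.randrange(256) for _ in range(WIDTH * HEIGHT))

smooth_size = len(zlib.compress(smooth, 9))
noise_size = len(zlib.compress(noise, 9))
print("raw:", len(smooth), "smooth:", smooth_size, "noise:", noise_size)
```

Both inputs have exactly the same dimensions and go through the same compressor with the same settings, yet the noise barely shrinks at all while the gradient becomes a small fraction of its raw size.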

Traditionally, one would choose a bitrate catered to technical limitations or even just a wet-finger guess, and enforce this while encoding. The codec would then attempt to stick to this bitrate by varying the quality per encoded frame. This way of working only makes sense if there is a reason to limit the bitrate. If there is no hard limit and consistent quality is important, do not rely on a fixed bitrate but set a quality level in the encoding software instead, and let it vary the bitrate across the video to maintain visual consistency. When the video is to be streamed over a limited bandwidth channel, a maximum bitrate still needs to be imposed, as well as other constraints like buffer sizes. In a codec like H.264, this is typically done by limiting the encoder to a certain level like 4.1.

With audio there is still a strong tradition to stick to fixed-bitrate encoding, although many codecs do support variable bitrate. Because audio requires much less space than video, it is just easier to know beforehand how much space needs to be allocated to it, even when large parts of it are known to be pure silence which would not take up any space at all when efficiently encoded.

If you need to perform bitrate calculations for video files, I have made a specific calculator for that.

Which format/codec should I use for my web movies?

This is a rather nasty question although the situation is improving. The HTML5 standard includes a video tag and the goal is that this tag is linked to a fixed container format with fixed codecs, such that any HTML5 compliant web browser is guaranteed to be able to render any video tag in any HTML5 website. This looks very promising, but at this time HTML5 video is still problematic because no true consensus has yet been achieved over the container format. There are two contenders: H.264 (in an MPEG-4 container) and WebM, and at the time of this writing, it is unclear which of the two formats will eventually emerge as the single true HTML5 video standard. Safest is to simply provide your videos in both formats. If you're tight on server resources and can only afford a single format, I would aim for the H.264 file because my impression is that it will eventually win the contest. In the long term I expect HTML5 video to evolve towards H.265, but don't take my word for it.

If you think the lack of a true standard described above is cumbersome, it used to be much worse. In the old days, the only way to embed videos in a website was through browser plug-ins. Before the advent of plug-ins, the only option was to offer downloadable video files in one or more formats and codecs that would hopefully be playable by the majority of visitors. As for plug-ins, first there was QuickTime, then VfW (WMV), and then came Flash (originally from Macromedia, later on bought by Adobe). Flash made things much simpler. The popularity of the former Google Video, YouTube in its early days, and similar websites, was partly due to the ease of playing Flash-based videos if a working Flash browser plug-in was installed. Flash however had serious drawbacks, one of which was that Apple never supported it on its mobile devices. This gradually led to Flash being discontinued at the end of 2020, in the sense that not only did Adobe stop developing it, the browser plug-in even refused to continue working at all. Browser plug-ins in general are now becoming a thing of the past. The idea for HTML5 is to include all the necessary frameworks for building rich media websites into the standard itself, and each browser must then offer its own implementation of the standard.

If for some reason you want to offer a download link for a video, you are less constrained than what the HTML5 standard dictates. In this case I would use an MP4 container with H.264 video in it. This is likely to be playable on any recent computer, mobile device, or media player.

A short history of container formats and codecs

The first edition of this article was released in 2006 and initially I tried to keep it up-to-date with the latest evolutions in digital media. This became more difficult over time, which is why I gave up on trying to capture every detail of every new development. The following overviews have therefore become more of a history of the earlier formats and codecs. For up-to-date information about the latest and greatest, you should look elsewhere.

Container formats

- QuickTime (.MOV, .QT): this was the first really successful multimedia format, introduced by Apple Computer, dating back to 1991. The first versions were only available for Mac OS but a Windows version soon followed. From the start, QuickTime was quite advanced with support for any number of streams and multi-segmented files. Editing a movie is simple and intuitive. Later versions even allowed scripting and interactive features. I remember viewing (albeit very short) movies of Apollo launches and a heap of camel bones fusing into a skeleton, while on a PC the most exciting thing one could do was watching an animated GIF.

- Video for Windows (.AVI): of course Microsoft had to make a clone(1a) of QuickTime as soon as possible, and they managed to do it within one year. Maybe this is why the original VfW specification was such a braindead format. It didn't have most of the advanced features QuickTime has. The format was extended by the OpenDML group to tackle some limitations of the original specification. Later on, MS dropped support for AVI in favour of their new WMV format. One reason was that VfW was not a big success. The main reason however was probably because AVI had become the format of choice for pirated movies — maybe due to the link between the simplicity of the format and the simplicity of the average teenage script-kiddie pirate. Arrrr. Technically however, those movies are not AVI files because Microsoft's VfW specification doesn't allow the use of the typical pirate codecs.

- DivX (.DIVX): at the time of this writing, this format is totally identical to AVI, at least the kind of unofficial AVI with the non-standard codecs. This is also the reason why the company which produces the (now legal) DivX codec has changed the file extension. Although Microsoft abandoned AVI, they still don't like it to be associated with pirated movies. Mind that due to this name change there's now a DivX format and a DivX codec.

- RealVideo (.RM): this was one of the first more successful competitors for QuickTime, although it never really became very popular either, probably due to the fact that initially it could only be played in the extremely proprietary RealPlayer. There is also an audio-only variant called RealAudio (.RA, .RAM).

- Windows Media (.WMV, .WMA, .ASF, .ASX): Microsoft's second attempt to dethrone QuickTime was more successful. It was just a matter of timing and abusing their 95% market share for the umpteenth time. Contrary to the time when VfW was introduced, now the average person actually started to use video on their PC. Windows Media being bundled with Windows, it really didn't matter how good or bad it was. Being preinstalled gave it a huge edge over QuickTime, which had to be installed by the user. The MS monopoly did the rest.

- MPEG-1 (.MPG, .MPEG, .MPE): this is a format designed by the ISO, or International Organisation for Standardisation. It is about as braindead as AVI, but was much more popular due to the fact that it is an ISO standard, and was inherently linked to a compression scheme which was really good at that time. The format was, and still is, used in Video CDs (VCD). The nice thing about this format is that it's an open standard. This means that playback is supported pretty much everywhere, although you need to pay for a licence to make an encoder.

- MPEG-2 (.VOB): this is an update of the MPEG-1 format, and is also an ISO standard. It is the format used for DVD movies. It is also inherently linked with certain compression schemes which allow better quality than the ones from MPEG-1.

- MPEG-4 (.MP4): another instalment in the MPEG series, offering much more advanced features than its predecessors. Because it was supposed to offer features similar to QuickTime's, MPEG-4 is in fact a fusion of QuickTime and the older MPEG standards. Apple has decoupled QuickTime from the .MOV format and made .MP4 the default format for the QuickTime 7 platform.

- Audio Interchange File Format (.AIFF, .AIF) and Windows WAVE audio (.WAV): as the names say, these formats can only handle sound. I take them together because they're pretty much equivalent and both derive from the more general IFF format (WAV through Microsoft's RIFF variant). AIFF is popular on Mac OS and some flavours of UNIX, WAV in Windows. Traditionally, an AIF or WAV holds simple uncompressed audio. But the format specification actually allows any codec to be used, so you can for instance have a WAV or AIF with MP3 audio.

- Ogg (.ogg): this is an open-source format, which is only supposed to contain Vorbis or FLAC audio and Theora video streams. Vorbis was designed as a royalty-free alternative to the MP3 and WMA codecs which require a licence to be used. On top of that, it offers a superior quality compared to those codecs. Theora is the same for video.

- Matroska (.mkv): an open-source container format that was partially motivated by the need to have much more flexibility than the ageing AVI format offered. For instance multiple sound and subtitle tracks can be contained in the same MKV file. In its early days, Matroska was only popular within certain circles like underground releases of Anime series and HD-DVD/BluRay rips, although there is no specific reason why it would be the most suited for that type of content. Nowadays the format is more widespread and is supported by the majority of media players.

- Flash Video (.flv): like the name says, it is the container format used by the now defunct Adobe Flash plug-in. Until the advent of HTML5 video, when you viewed videos in YouTube and other Flash-based video websites, your browser actually downloaded an FLV file in the background and fed it to the Flash player. The FLV container format has gained increasing support by other standalone players. The format itself is an open standard, but some of the codecs contained inside the files are proprietary. Now that Flash is obsolete however, the relevance of this format will decline.

- WebM (.webm): this is actually a restricted variant of the Matroska container, only allowing the VP8 video and Vorbis audio codecs. The format is intended to become the standard for HTML5 video. However, at this time there is a battle between proponents of this format (Google amidst others) and proponents of H.264 (in an MPEG-4 container). Either one of these formats will need to emerge as the winner in order for HTML5 video to become a truly practical standard.

Video codecs

Video codecs have come a long way. Between raw uncompressed video, the only thing available in the earliest days of digital video, and the latest most advanced codecs, there is easily a factor of 300 or even better when it comes to the reduction in storage space needed to represent video at a quality level with no obvious visual degradation. The following list in roughly historical ordering gives a bit of a hint of how this is possible.

- Cinepak: now utterly obsolete, but in the early days of internet video this was the most popular codec. It used to be a standard codec in both QuickTime and VfW. The codec worked by splitting up each video frame in a grid of small squares. If in the next frame a square was almost identical to the same square in the previous frame, it was omitted. Otherwise a new square was stored, which was compressed on its own. Compared to raw video, this gave a huge reduction in size.

- Motion-JPEG: this is an adaptation for video of the JPEG compression scheme (used for still photographs). Actually MJPEG just encodes each frame with a variation of JPEG that is optimised for speed, without trying to exploit relations between similar frames. The advantage is that each frame can be accessed without having to reconstruct previous or future frames. For this reason this codec is still used when straightforward editing is desirable, e.g., on portable cameras.

- MPEG-1: this codec is actually a group of codecs, meant to be used in MPEG-1 media files. In the case of MPEG-1, it is justified to interchange the format and the codec, because they are mostly inseparable. This codec builds upon the same idea as Cinepak, but adds the concept of motion estimation. This means that each of the tiny blocks can now also move around between consecutive frames. So-called P-frames can be described, or predicted, by referring to parts of the previous or same frame at any position (in practice, the range of movement is limited). It is also possible to use both preceding and future frames for prediction (so-called B-frames, for ‘bidirectional’). This enables an even larger reduction in file size compared to Cinepak.

- MPEG-2: technically, there is not much difference with MPEG-1, except for some small improvements. While MPEG-1 was mostly meant to be the digital equivalent of VHS video, MPEG-2 is intended for higher quality digital video, more specifically DVD. The specification allows for larger video resolutions and higher bitrates. This codec requires licensing for commercial applications.

- MPEG-4: you may wonder: what happened to MPEG-3? Well, it never existed. The MPEG numbering scheme is a story on its own, for instance after MPEG-4 comes MPEG-7. Anyhow, MPEG-4 is not really a codec but rather a specification for a group of codecs. This means that one can make multiple different MPEG-4 compliant codecs. The nice thing is that if a codec is MPEG-4 compliant, it can in theory be played with any MPEG-4 compliant player. The MPEG-4 standard consists of multiple parts and Part 2 describes the first MPEG-4 video codec, which is therefore the one that is often simply called the MPEG-4 codec.

The basis is the same as MPEG-1 but with advanced features which allow another leap in compression performance. For instance, instead of simply linking image parts to different positions in previous frames, small differences between those parts can be modelled and encoded as well if not too large. This allows much more efficient modelling of colour/intensity changes or minor differences between frames. The standard also allows predicting a frame from multiple reference frames, and multiple consecutive B-frames instead of only one. Microsoft was one of the first to make a so-called MPEG-4 codec, but as usual they managed to make it non-standard in some way.

- Intel Indeo Video: this is a proprietary codec from Intel. It is a bit like a predecessor of Sorenson, with the difference that it isn't licensed exclusively to a single format: Intel made the codec available for both VfW and QuickTime. Unfortunately, the QuickTime codecs were not ported to the new OS X version of QuickTime. On the technical side, I cannot tell how Indeo works because it is proprietary. I assume it has similarities with MPEG-1. There were multiple versions with increasing performance but this codec is now obsolete.

- Sorenson Video: this is the infamous codec I've been talking about. It was originally licensed exclusively to Apple and later on also to Adobe, so you'll only find it in QuickTime and Flash movies. The upside of this codec is that it had quite good performance at the time it was popular. There were several versions, with each version improving upon the previous. For a long time this codec was used for all the movie trailers available on the Apple site. (Luckily, these trailers moved on to use the standard MPEG-4 codec or H.264.) Again, due to it being proprietary, I can't tell you anything about how it works. Judging from the compression quality and artefacts on low-bitrate video, it seems to be related to MPEG-1, with later versions moving towards MPEG-4.

- WMV: like the name says, this is the codec which accompanies the Windows Media format. It is as proprietary as it can be, so don't ask me how it works. Because it came shortly after Microsoft's non-standard MPEG-4, I assume they started from the same basis. There are several versions with slight improvements in each update. However, the main reason why MS used to push this format, is that they have absolute control over it. It features DRM, which officially means Digital Rights Management but from a user's point of view it might as well mean Digital Restrictions Management.

- DivX: the first version of DivX (DivX 3) actually was nothing more than a hacked version of the MS MPEG-4 codec. This codec started the proliferation of pirated movies, because most movies could now fit onto one CDR with reasonable image quality. The pirates hijacked the AVI format for this purpose. For audio, another hacked codec was used: either MP3 or WMA. Some of the early pirated AVI movies even used WMV for video. With version 4, the developers of DivX re-wrote the codec from scratch to make it legal and improve it. More versions with better performance followed, and from version 6 on, DivX decided to rename the ‘pirated’ AVI format to DIVX.

- XviD: yes, it is simply DivX spelled backwards. This codec was an attempt by the open source community to create a legal clone of the DivX 3 codec. It was developed independently from the DivX 4 codec, but since both codecs are MPEG-4 compliant, the resulting video streams are mostly compatible. After a few versions, the XviD codec equalled or even surpassed the quality of the DivX codec, but it gradually became obsolete since the advent of H.264.

- H.264: also known as AVC (Advanced Video Coding), this codec was developed by the same groups as MPEG-4 and therefore has been included in the standard (more specifically, it is part 10 of the standard). It has been designed to give excellent performance even under low bitrate situations, and it achieves this goal. Some of the features that enable this are an in-loop de-blocking filter and a much larger flexibility in what data can be used to predict other data. The higher efficiency made it a suitable codec for use in BluRay discs. At the time of this writing it is one of the best performing codecs. Unfortunately this performance comes at the price of increased processing power requirements, which is usually tackled by dedicated decoding hardware. Also, even though this is a standardised codec, licensing is required for commercial applications. The popularity of the open-source library called x264 has caused many people to incorrectly think there is an “x264 codec,” while x264 is just an implementation of the H.264 codec.

- VC-1: a codec primarily developed by Microsoft and originally tightly tied to WMV, but it has become more open and is one of the codecs supported by BluRay. It is often considered an alternative to H.264: it has a similar licensing scheme and offers comparable performance, with an advantage in low-latency situations. It is however mostly vanishing into the shadows of H.264 and H.265.

- H.265: as you may have guessed, this is the successor to H.264. It is also known as HEVC (High Efficiency Video Coding). The improvements aren't dramatic but good enough that this codec is considerably more efficient and more suitable for ultra high definition streams (4K and 8K). It also allows for higher bit depths (number of bits used to represent colour intensities), which helps with accurately representing HDR (high dynamic range) video.
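The two oldest tricks in the list above, skipping unchanged blocks (as described for Cinepak) and letting blocks move between frames (as described for MPEG-1), can be sketched in a few lines of Python. This is a toy model under stated assumptions and not the actual algorithm or bitstream of either codec: frames are small grids of grey values, blocks are 4×4, only exact matches count, and the names `encode_block`, `sad`, `blank`, and `paste` are invented for the example. Real codecs also store the small remaining difference between a block and its reference, which is left out here.

```python
BLOCK = 4  # block size in pixels (toy value)


def get_block(frame, top, left):
    return [row[left:left + BLOCK] for row in frame[top:top + BLOCK]]


def sad(a, b):
    """Sum of absolute differences between two blocks."""
    return sum(abs(p - q) for ra, rb in zip(a, b) for p, q in zip(ra, rb))


def encode_block(prev, cur, top, left, search=2, tolerance=0):
    """Decide how to store one block of `cur`, given the previous frame."""
    target = get_block(cur, top, left)
    # Unchanged block: store nothing at all.
    if sad(target, get_block(prev, top, left)) <= tolerance:
        return ("skip",)
    # Motion search: look for the same content nearby in `prev`.
    height, width = len(prev), len(prev[0])
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            y, x = top + dy, left + dx
            if 0 <= y <= height - BLOCK and 0 <= x <= width - BLOCK:
                if sad(target, get_block(prev, y, x)) <= tolerance:
                    return ("move", dy, dx)  # store only the offset
    return ("new", target)  # nothing usable found: store the pixels


def blank(height, width):
    return [[0] * width for _ in range(height)]


def paste(frame, top, left, pattern):
    for r, row in enumerate(pattern):
        frame[top + r][left:left + len(row)] = row


pattern = [[10 * (r + 1) + c + 1 for c in range(BLOCK)] for r in range(BLOCK)]
prev, cur = blank(8, 8), blank(8, 8)
paste(prev, 0, 2, pattern)  # object at columns 2..5 in the previous frame
paste(cur, 0, 4, pattern)   # the same object, moved two pixels to the right

decisions = {(top, left): encode_block(prev, cur, top, left)[0]
             for top in (0, 4) for left in (0, 4)}
print(decisions)
```

In this example the two bottom blocks are skipped, the block holding the moved object is stored as a mere offset of two pixels, and only the top-left block has to be stored in full.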

Audio codecs

Unlike video, audio data does not consist of highly repetitive structures in two or even three dimensions, which makes it harder to compress. The best compression schemes actually exploit limitations of the human auditory system to discard information in a way that is usually not noticeable. For the best codecs currently available, this has led to a reduction in storage requirements of almost a factor of 10, a far cry from the factor of 300 achieved in video compression but still a lot better than nothing.
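To put that factor of 10 into numbers, here is a small Python sketch. It assumes CD-quality audio as the uncompressed reference (44.1 kHz, 16 bits per sample, stereo); the function names are made up for this illustration.

```python
def pcm_bitrate_kbps(sample_rate_hz: int, bits_per_sample: int, channels: int) -> float:
    """Bitrate of uncompressed audio in kilobits per second."""
    return sample_rate_hz * bits_per_sample * channels / 1000


def compression_ratio(original_kbps: float, compressed_kbps: float) -> float:
    """How many times smaller the compressed stream is than the original."""
    return original_kbps / compressed_kbps


def megabytes_per_minute(kbps: float) -> float:
    """Storage needed for one minute of audio at the given bitrate."""
    return kbps * 1000 * 60 / 8 / 1_000_000


# Assumption: CD-quality audio is the uncompressed reference.
cd_kbps = pcm_bitrate_kbps(44_100, 16, 2)

print(cd_kbps)                                        # 1411.2
print(round(megabytes_per_minute(cd_kbps), 1))        # about 10.6 MB per minute
print(round(cd_kbps / 10, 1))                         # factor 10 leaves about 141.1 kbps
print(round(compression_ratio(cd_kbps, 128), 1))      # a 128 kbps stream is about 11 times smaller
```

In other words, a reduction of "almost a factor of 10" corresponds to compressed bitrates somewhere around 140 to 160 kbps for CD-quality stereo.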

- PCM (Pulse-code modulation): this is not really worthy of the name ‘codec’, because it is in fact uncompressed audio. The stored data is a list of consecutive sound pressure measurements. It was the only option in the early days, and the only way to save space was to use lower sampling rates or fewer bits per sample, resulting in a dull and/or noisy or ‘crunchy’ sound.

- A-law and Mu-law: these are not really codecs either, but alternatives to the evenly spaced amplitude levels that regular PCM uses to quantise samples. A logarithmic scale is used instead, which effectively gives more dynamic range. With regular PCM, quiet sounds are relatively noisier than loud ones, while with A- or Mu-law the noise is more evenly distributed over a large range of volumes.

- IMA/ADPCM: this was one of the first codecs that delivered a 1:4 compression ratio for 16-bit audio, with less quality loss than simple downsampling. It was used both in QuickTime and VfW, called IMA in the former and ADPCM in the latter. The codec was still primitive though, and an audible amount of noise was introduced in the sound. What it basically did was adapt the quantisation step size on the fly depending on the local maximum amplitude. This provided a more accurate representation of quiet sounds, but also a coarser representation of loud sounds, in other words an overall more uniform noise level.

- QDesign Music and Qualcomm PureVoice: these are two proprietary QuickTime codecs which were quite popular in the days they were introduced. The QDesign codec has similarities to MP3 but is more primitive, and the Qualcomm codec is designed to compress speech.

- MPEG-1 audio (MP1, MP2, MP3): more correctly, the three flavours of this codec are called MPEG-1 Layer I, II and III. Complexity and low-bitrate performance increase with increasing layer number. Layer I is a simplified version of Layer II, while Layer III is quite different.

The Layer III codec is by far the most popular (not just of these three, of all codecs) and is more commonly known as MP3. Like DivX/MPEG-4, this popularity finds its roots in piracy: MP3 made widespread copying of music over the internet possible. Strictly speaking, anyone who makes an MP3 encoder needs to pay a licence fee to the Fraunhofer institute. Worse, using MP3 for a commercial streaming application also requires a licence.

These codecs work so well because they use a so-called psychoacoustic model, a model of the human auditory system, to throw away inaudible information. The compression ratio can be chosen; a popular bitrate is 128 kbps, which results in a ratio of 1:11. It has long been a myth that it results in sound quality indistinguishable from a CD, and many people still believe in this myth. The truth is that on any decent sound system and with well-functioning ears, 128 kbps MP3 sounds like manure. A bitrate of 192 kbps or higher is more realistic for near-CD quality. (In case you were wondering: transcoding a 128 kbps file into 192 kbps will not improve its quality, on the contrary.) Moreover there is a complication: the standard only describes the MP3 decoding in detail, not the encoding. This means that there are countless ways to implement an MP3 encoder, which is why quality between MP3 files of the same bitrate can differ greatly. There are some very good MP3 encoders out there, like LAME, and also some truly crappy ones. Note that despite its higher complexity and better performance at low bitrates, MP3 has some inherent limitations that prevent it from accurately reproducing certain sounds even at its highest possible bitrate. Starting at 256 kbps, Layer II actually beats MP3 in this regard.

MP3 is one of the rare cases where it is common to simply store the encoded data stream as-is in a file, without wrapping it in a container format. The typical ‘MP3 file’ will at best contain a few headers in the de facto standard ID3 format to indicate artist, title, etc., but the stream itself contains enough information to be readily decoded.

- AC3: this is one of the codecs used for audio on DVD and Blu-ray. It is designed to support multiple channels, which makes it suitable to encode surround sound. It is related to MP3 because it also uses frequency information to discard inaudible information, but is more primitive. It is typically used at bitrates of 384 or 448 kbps on DVD, and 640 kbps on Blu-ray.

- DTS: another codec that is specifically designed for surround sound on DVDs and Blu-rays. It uses a less complicated compression scheme than AC3 and therefore requires a much higher bitrate: about 1.5 Mbps (similar to uncompressed stereo CD audio). At this bitrate DTS is generally regarded as being indistinguishable from the uncompressed source. It is possible to encode 5.1 DTS at 768 kbps but in my experience this results in worse quality than 384 kbps AC3.

- AAC: this is intended to be the successor to both MP3 and AC3 and is the standard audio codec for MPEG-4 media (to be precise, it belongs with Part 3 of the MPEG-4 standard). Quality is slightly better than MP3. It is also the codec used in the iTunes music store, initially augmented with a DRM scheme until Apple decided to switch the entire store to the DRM-free “iTunes Plus” in 2009. Although a licence is required to manufacture an AAC codec, unlike MP3 no licence is required to use the codec for streaming of any kind.

A variant called ‘HE-AAC’ is available that uses a trick called “spectral band replication” to improve quality at low bitrates. The trick is to encode the sound at a lower sampling rate, and reconstruct the discarded high-frequency content during playback by making an educated guess based on the spectrum of the lower frequency bands. This works amazingly well to provide audio that does not sound obviously degraded, but differences would be noticeable when directly comparing to the uncompressed original.

- WMA: the audio codec of Windows Media. Judging from sound quality, it is very strongly related to MP3 although newer versions are slightly better. How much better is anyone's guess because it is proprietary. This format was often used in music stores other than Apple's, and of course used DRM in such cases.

- Ogg Vorbis: this is an open source alternative to MP3, developed by Xiph. It is designed to be completely patent- and royalty-free (although the companies behind MP3 and AAC want you to believe otherwise). Moreover the performance is really good, from low to high bitrates. This codec focuses on quality-based encoding, which means the bitrate is automatically adjusted to ensure constant quality. For most applications which do not involve real-time streaming, this makes a lot more sense than the fixed bitrate that is the default for most other codecs. Of course this codec also supports a fixed-bitrate mode.

- Speex: another Xiph codec that was designed specifically for encoding speech at very low bitrates.

- Opus: this is the successor to both Ogg Vorbis and Speex, combining the qualities of both and automatically using the most appropriate algorithm. It offers excellent quality even at low bitrates.
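To make the logarithmic scale from the A-law and Mu-law entry in the list above more tangible, here is a minimal Python sketch of Mu-law companding. It assumes the textbook formula with μ = 255 and samples normalised to the range -1 to 1. The function names and the simple 8-bit rounding are my own illustration, not the exact bit layout that real telephony encoders use.

```python
import math

MU = 255  # the value commonly used for 8-bit Mu-law


def mu_law_compress(x: float, mu: int = MU) -> float:
    """Map a sample in [-1, 1] onto a logarithmic scale, also in [-1, 1]."""
    return math.copysign(math.log1p(mu * abs(x)) / math.log1p(mu), x)


def mu_law_expand(y: float, mu: int = MU) -> float:
    """Inverse of mu_law_compress."""
    return math.copysign(math.expm1(abs(y) * math.log1p(mu)) / mu, y)


def quantise(v: float, bits: int = 8) -> float:
    """Round a value in [-1, 1] to the nearest of 2**bits - 1 evenly spaced levels."""
    steps = 2 ** (bits - 1) - 1
    return round(v * steps) / steps


def linear_error(x: float, bits: int = 8) -> float:
    """Error when the sample is quantised directly (regular PCM)."""
    return abs(quantise(x, bits) - x)


def companded_error(x: float, bits: int = 8) -> float:
    """Error when the sample is compressed, quantised and expanded again."""
    return abs(mu_law_expand(quantise(mu_law_compress(x), bits)) - x)


for sample in (0.003, 0.9):  # one quiet and one loud sample
    print(sample, linear_error(sample), companded_error(sample))
```

With 8 bits, the quiet sample is rounded to zero by regular PCM but survives the Mu-law round trip almost intact, while the loud sample comes out slightly less accurate with Mu-law. That is the trade-off described above: the error is spread more evenly over the volume range.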

History: media formats vs. players

Besides confusing a container format with a codec, another misconception that used to be prevalent was confusing a media format with a media player. Luckily this confusion has become less common since the marketplace for digital media lost a lot of its monopolistic nature and video playback became a standard feature of any mobile device. Traditionally a media format was only playable in its accompanying player, like the QuickTime Player for MOV, Windows Media Player for AVI and WMV, or RealPlayer for RA and RM. Back in those days this made sense, because in the beginning there was no need for other players: there was only QT on Mac OS, and AVI on Windows.

However, there is no technical reason why today a certain player couldn't open and play a format other than its native one. Again, the only barriers against this are legal. Many of these barriers have broken down thanks to the proliferation of media formats on the internet. For instance, after a few versions QuickTime Player could also play standard AVI files, and the Mac OS version could even play WMV files by installing a plug-in. Most players can play MPG files and virtually any player can play WAV files. VLC and MPlayer are open-source players which intend to support as many formats as possible. They can play MOV, AVI, WMV, RM and many other formats, and support a large number of codecs. It is this kind of convenience that has allowed players like these to mostly displace native players like QuickTime or Windows Media Player.

There are only two obstacles that can prevent a player from playing a certain file: the codecs or DRM. Codecs are the reason why one AVI or MOV file plays fine in your copy of MPlayer while other files with the same extensions don't: if your player doesn't support the codec used in the file, it won't play. As you know, WMV is a proprietary codec, which is why playing WMV files in anything other than Windows Media Player often used to be a pain in the ass. Detailed specifications required to implement your own complete WMV decoder were not publicly available. One had to either pay a licence fee to Microsoft or resort to tactics like reverse engineering to be able to play WMV outside of the Windows framework. A more devious tactic was to tap into copied Windows Media software libraries, which was a legally very dubious practice. This means that any variants of WMV that have not been reverse engineered yet cannot be played on platforms other than those for which WM libraries are available.

The same goes for QuickTime's Sorenson codec. I'm not sure if all its variants have been reverse engineered yet, or whether the rights and specifications have been released to the public, and to be honest probably nobody cares because this codec has been largely displaced by H.264 and its successors.

DRM (Digital Rights or Restrictions Management, depending on whether you're a lawyer or user) is the other reason why you can only play certain files in a certain player. As far as I know, Windows Media is the only media format that is engineered for DRM. The creator of a WMV or WMA file can decide to only let the user play a movie on a single PC after having paid for a licence code. Because WM DRM is only implemented in Windows, it's impossible to play such files on any other platform. The AAC audio files from the iTunes music store also originally had a layer of DRM added to them until the introduction of iTunes Plus, although the restrictions were not that strict.
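The two obstacles can be summarised in a toy model. The Python sketch below is purely illustrative: the `MediaFile` and `Player` classes, their fields and the codec names are hypothetical and do not correspond to any real player's API. The point it makes is that the container (and hence the file extension) plays no role in the decision.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MediaFile:
    container: str            # e.g. "mov" or "avi"; says nothing about the codecs
    codecs: frozenset         # codecs of the streams inside the container
    drm_protected: bool = False


@dataclass(frozen=True)
class Player:
    supported_codecs: frozenset
    can_unlock_drm: bool = False


def obstacles(player: Player, media: MediaFile) -> list:
    """Return the reasons why `player` cannot play `media` (empty list = playable)."""
    reasons = [f"unsupported codec: {codec}"
               for codec in sorted(media.codecs - player.supported_codecs)]
    if media.drm_protected and not player.can_unlock_drm:
        reasons.append("DRM-protected")
    return reasons


# Hypothetical player and files, for illustration only.
player = Player(supported_codecs=frozenset({"h264", "aac", "mp3"}))
new_mov = MediaFile("mov", frozenset({"h264", "aac"}))
old_mov = MediaFile("mov", frozenset({"sorenson", "qdesign"}))
shop_wma = MediaFile("wma", frozenset({"mp3"}), drm_protected=True)

print(obstacles(player, new_mov))   # []
print(obstacles(player, old_mov))   # ['unsupported codec: qdesign', 'unsupported codec: sorenson']
print(obstacles(player, shop_wma))  # ['DRM-protected']
```

Both MOV files have the same extension, yet only one of them plays, and the last file is blocked even though its codec is supported.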

It is clear that from a user's point of view, DRM is totally undesirable and it seems most companies have finally started to understand this. If one simply makes it easy enough for customers to stream media at a reasonable cost, then it no longer makes sense to keep investing in increasingly watertight anti-theft technology to prevent copying of the stream at all costs. It suffices to make the security good enough that the subjective cost of capturing and illegally redistributing the stream is considerably higher than the actual cost of getting the stream flowing legally in an easy-to-use interface on any device of choice. This is one of the reasons behind the success of companies like Netflix.

History: why certain formats were hated by certain people

QuickTime

On the Windows platform and also to a lesser degree on Linux, QuickTime was rather unpopular for several reasons. Of course one of the reasons is that Windows Media is bundled with Windows, and QuickTime is not. That's not something Apple can do much about. However, Apple itself also made a few stupid mistakes. First of all, QuickTime on Windows became notorious for bad stability. Of course it would have been easy for Microsoft to make QuickTime crash on random occasions(1b). But even if we put all paranoia aside and look at how stable current versions are, there are still some major issues.

Since version 3 of QuickTime, there were two variants that reek of involvement from an overly active marketing team: standard QuickTime and QuickTime Pro. The first only allows you to play movies, and you need to pay for the latter. However, Apple was stupid enough to include a nag screen in the standard version which always popped up when opening the QT Player, until you upgraded to QuickTime Pro. That's one hell of a way to annoy people! Luckily the pop-ups were removed in newer versions, but they are still burned into people's collective memory. Worse, however, is that for a very long time full screen playback was restricted to the Pro version only. Whoever came up with that idea should be fired for obviously having no clue about how annoying the lack of full screen is. All other free players have it! Luckily someone saw the light and since version 7.2, full screen is again a feature of the standard QuickTime player. The browser plug-in still lacked full screen though, but luckily browser plug-ins have gone the way of the dodo.

The grudge of Linux users against QuickTime stems—next to the fact that there has never been an official QuickTime for Linux—mostly from the proprietary codecs like Sorenson and the QDesign Music codec. These were used in many movies created with QT 3 up to QT 6. Actually that's also the only valid argument against QuickTime, because movies with non-proprietary codecs can be played fine. The specification of the QuickTime format itself is openly available, and there are open source projects with libraries for handling the format. Moreover, the use of proprietary codecs is decreasing in favour of the more standard MPEG-4 and AAC.

Windows Media

Of course on the Mac and Linux platforms the roles were reversed, and the WMV format was pretty unpopular there to say the least. Despite what the average Windows user may think, WMV is a lot worse than QuickTime once it leaves its native environment. With all essential documentation publicly available, one can write a working QuickTime player from scratch. Of course it will only be able to play movies with non-proprietary codecs, but it will be able to play many movies. Trying to write a WMV player from scratch didn't get you anywhere, because both the format and all codecs were proprietary. The result is that for a very long time, there was no decent way to play WMV movies in Mac OS or Linux. When Microsoft finally released the first Windows Media Player for Mac OS, it was so bad that it almost seemed intentional(1c). Things got a lot better when Flip4Mac introduced a plug-in that enabled QuickTime to import, play and even convert WMV files as if they were QuickTime movies. However, movies with “advanced” (read: annoying) features like DRM were still impossible to play.

In Linux running on an x86 machine, it was possible to tap into Windows library files to decode WMV. So, on that platform it wasn't too bad, legal issues aside. However, with the growing popularity of 64-bit machines and the lack of a 64-bit WMV library, it again became apparent that this was just a lucky coincidence. On those machines only the variants of WMV that had been reverse engineered could be played until the advent of a 64-bit WMV implementation. Of course playing files with DRM is (and probably will remain) impossible on any Linux machine until someone hacks it. Luckily the whole relevance of the Windows Media format and codec is rapidly dwindling.

(1a, 1b, 1c): If you want to know more about the entire complicated media format war between Microsoft and its competitors, this article explains it in detail.