Just how useful is UHDTV, aka 4K or 8K?

The subject of this article is image resolution and the new Ultra High Definition Television or UHDTV standard, which includes the 4K format, also known as 2160p, as well as 8K or 4320p, which again doubles the resolution compared to 4K. Some people seem to believe this is a logical evolution in digital video and that every consumer will happily spend money on replacing their Full-HD television with an Ultra-HD set. I have already mentioned the trend of increasing video resolutions in “Why ‘3D’ Will Fail… Again” and if you have read that article, you can already guess where this one is headed. A test is provided that allows you to see whether you would be able to discern any extra detail in a UHD image compared to a Full-HD image.

The low-down of this article is that for consumers in practical household situations, watching video on a UHD (2160p) set-up will not yield any visible improvement in image sharpness over a Full-HD (1080p) set-up. One needs something resembling a home cinema to truly benefit from the higher resolution. Obviously, given the even higher resolution of 8K, its usefulness is even more limited: in practice its only sensible applications are 360° VR or dome projections.

One benefit of the UHDTV standard, even when spectators cannot notice the increased image resolution, is its extended BT.2020 colour space. This improvement will however be hard to notice except in a side-by-side comparison with the previous BT.709 standard.

For content creators, UHD is useful because the higher resolution offers more freedom in post-processing, and Full-HD material created from 4K or 8K sources will look better than if it had been filmed directly in 1080p.

Overall, I am quite certain the general public will not care about UHD beyond the bragging rights of keeping up with the latest trends and highest numbers on spec sheets.

The gist of this article was also explained by the former vice president of R&D at RCA in a nice three-minute video. Unfortunately the website that hosted it is now gone and even if the video is still online, it is impossible to find. The only thing I can give you is the former URL, which does contain a very concise summary of both that video and this article: www.gravlab.com/2014/01/02/4k-super-hd-tv-utterly-useless-unless-sit-much-closer.

- What is 4K and 8K?

- “Videophiles”: will 4K and 8K be the new SACD?

- 1080p is plenty for most consumers

- Do Your Own Visual Acuity Test

- How the Test Works

- Screen Size vs. Viewing Distance Calculator

- Screen Size vs. Viewing Distance Calculator (Simplified)

- Practical Examples

- The Big Stick: Colour Space

- Conclusion

What is 4K and 8K?

A digital image (or video frame) is represented by a grid of pixels, and the resolution is the number of pixels in a given direction. In theory, an image with a higher resolution has the potential to appear sharper. The word ‘resolution’ can be used in absolute or relative terms. An absolute resolution specifies the number of pixels per unit of length. The resolution of printers is always specified in absolute terms: “600 dpi”, for instance, means the printer can plot 600 distinct dots over a distance of one inch. A relative resolution only specifies the number of pixels: a 1080p image has (at most) 1080 pixels in the vertical direction. Relative resolutions are most commonly used when it is not known beforehand at what exact size the image will be displayed, as is the case for digital cinema. Even though a computer display and even the sensor in a digital camera do have fixed sizes, their resolution is almost always specified in relative terms.

Mind that in principle, a different absolute resolution can be used in the horizontal and vertical directions. This means pixels would not be square but rectangular. For the oldest digital video standards this was the case, but from 720p on the pixels have always been square.

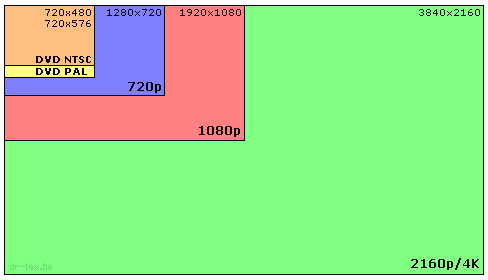

The whole point of 4K and 8K is to increase the resolution compared to existing standards. To add some extra confusion to the already dodgy video resolutions naming scheme, ‘4K’ and ‘8K’ refer to the horizontal number of pixels in a typical 2160p or 4320p image, while the latter numbers actually refer to the maximum number of vertical lines in the image. Compared to the now ubiquitous “Full HD” or 1080p standard, 4K offers twice the linear resolution, or 4 times as many pixels. A single 4K film frame contains about 8 megapixels. And then there is also 8K, which is this resolution again doubled, hence 16 times as many pixels as 1080p, or about 33 megapixels.

As if the naming were not confusing enough, there are actually two different major 4K resolutions: one which has 3840 horizontal pixels (typically called UHD, meant for consumer applications), and one with 4096 horizontal pixels (DCI 4K, meant for cinema productions). In the rest of this article, I won't distinguish between these two because the difference is minor.
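For readers who like to check the arithmetic, here is a small sketch that tallies the pixel counts of the formats mentioned so far. The frame rate of 24 frames per second is my own assumption for the pixel-rate column (it is the usual cinema rate); higher frame rates scale the last column proportionally.

```python
# Frame sizes (width, height) of the formats discussed in this section.
FORMATS = {
    "1080p (Full HD)": (1920, 1080),
    "2160p (UHD 4K)": (3840, 2160),
    "DCI 4K": (4096, 2160),
    "4320p (UHD 8K)": (7680, 4320),
}

FPS = 24  # assumption: cinema frame rate, not a value fixed by the formats


def megapixels(width, height):
    """Number of pixels in one frame, in millions."""
    return width * height / 1e6


def pixel_rate(width, height, fps=FPS):
    """Number of pixels per second at the given frame rate."""
    return width * height * fps


full_hd = 1920 * 1080
for name, (w, h) in FORMATS.items():
    print(f"{name:16s} {w}x{h}  {megapixels(w, h):5.1f} MP  "
          f"{w * h / full_hd:5.2f} x Full HD  "
          f"{pixel_rate(w, h) / 1e6:5.0f} Mpixel/s at {FPS} fps")
```

Doubling the linear resolution quadruples the pixel count each time, which is why the storage and bandwidth requirements grow so much faster than the format names suggest.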

The major question is: how useful is 4K? Does the average consumer want it? For whom is it useful? Is it warranted to do costly upgrades of equipment everywhere to push about 200 million pixels per second to consumers? And what about 8K? If you have read my 3D article, you can already guess my answer to these questions.

“Videophiles”: will 4K and 8K be the new SACD?

Chances are you have no idea what SACD is. It stands for Super Audio Compact Disc, and was at one time touted to be the successor to the regular audio CD. It features a higher sampling rate and bitstream technology that equates to a higher resolution than the standard 44.1 kHz 16-bit audio. From a purely objective point of view, it offers better sound quality. The format was loved by audiophiles, the kind of people who claim to be able to hear a difference when loudspeaker cables are elevated by little porcelain stands as opposed to simply lying on the floor.

The average customer however was unwilling to pay a premium price for this new format that was incompatible with standard CD players and that could not be ripped to iTunes. Worst of all, it offered no noticeable improvement. People were perfectly content with their ordinary CDs. Heck, people had been quite content with vinyl records for many decades and now they are perfectly happy with MP3 and AAC. From an objective point-of-view, quality-wise those lossy compression formats are much worse than CD audio. It is only because they exploit weaknesses of the human auditory system that they give a subjective impression of being of equal quality.

I assure you, under realistic circumstances the average consumer is unable to readily tell the difference between regular CD audio and formats with a higher bit-depth or sampling rate like SACD, if they are mastered from exactly the same original waveform and played back at exactly the same comfortable loudness. The latter is absolutely crucial: those who believe they heard a difference during a demonstration have been fooled by a sneaky salesman who made sure to play the more expensive sound system at a higher volume, which will always make it sound ‘better’ unless it has some truly obvious problem. Only when the volume is cranked up to levels that cause hearing damage may a perceivable difference become apparent. Therefore it is not surprising that SACD ended up a huge flop that soon vanished into near oblivion.

The whole point of this article is that as far as consumers are concerned, I believe UHDTV will go the same path as SACD. Aside from the professionals and content creators for whom this high resolution is truly useful, only a small group of consumers who could be dubbed ‘videophiles’ will care about the format, and in most cases they will only believe they see an improvement in quality because they want to, or because a salesman has used a trick like boosting the brightness of the more expensive TV.

I have two arguments for this claim, which are explained in the next sections.

1080p is plenty for most consumers

My first argument is that there are surprisingly many people who do not even care about the 1080p HD standard. They are content with 720p or even standard definition as offered by DVD. I do not have any hard data to back up this claim, but wherever I can find an overview of the number of downloads of a video file offered in SD (standard definition), 720p, and 1080p, the SD version gets by far the most hits, followed by 720p, and 1080p lags far behind.

For the second argument I do have some scientific data to back it up. As a matter of fact we will be collecting that data here and now, tailored to your specific situation. This data will prove that just as with CD versus SACD, it will be impossible to notice any improvement over 1080p except in situations that are unattainable or impractical for the majority of consumers. It will be necessary to display the image at unrealistically large sizes, and a lot of source material will not even contain the degree of detail the format can represent.

Before moving on to the visual acuity test, here is a small but telling illustration. The following images show the same fragment of a digital photo, down-sampled and then up-sampled again to simulate the case where different consumer media are created from a 4K master, which are then projected at the same scale. The rescaling was performed using Lanczos resampling.

As you can see, there is definitely a loss of sharpness between 2160p and 1080p, but it is not as dramatic as one would expect from halving the resolution. Now suppose that the original image was 1080p and we would reduce it to 720p. This amounts to a reduction in resolution to 67%. We get this:

You need to look really closely here to see any difference at all. Yet, in the previous examples there was a clear difference between 1080p and 720p. What is going on here? The answer is that in the raw output taken directly from this camera, there simply is nothing anywhere near the maximal sharpness the resolution could theoretically offer. There are various reasons for this which I will not explain here. The fact is that almost no camera except perhaps very high-end ones will be able to resolve image details that are as fine as one would expect from its resolution. The true limit lies a good notch lower: in this case the camera only seems able to record details up to about 67% of the theoretical maximum spatial frequency. The fact that I used a high-quality resampling algorithm here is important: had I used simple bilinear scaling, the difference could be more visible due to scaling artefacts.

What this does illustrate is that even though 4K proves overkill for the average consumer, they will still reap benefits from its existence even on a 1080p display device. The mere fact that content creators will be using 4K will improve the quality of 1080p down-sampled material as well. A 1080p Blu-ray created from 4K source material has the potential to look sharper than if it had been filmed directly in 1080p.

Do Your Own Visual Acuity Test

We do not need a 4K display to demonstrate how useful 4K might be to you personally. All we need is a measurement of your personal degree of visual acuity, or the limit on the ability of your eyes to resolve image details. Once we know this limit, we can determine at what size an image of any resolution should be projected to stay below this limit. Obviously if the degree of detail offered by 1080p already matches or exceeds your limit, then an upgrade to 4K will be pointless.

If you know how well you score on an acuity test (“20/x” score), or you do not care about perfect accuracy and have a vague idea whether your eyesight is normal, below, or above average, then you can go straight to the simplified screen size vs. distance calculator. If you want to use the full calculator instead, you can use one of the following values for α:

- α = 0.5 if you have extremely sharp eyesight,

- α = 1.0 if you have normal eyesight,

- α = 1.7 if you have unsharp vision.

Otherwise, I provide a test right here that allows you to measure your acuity with fairly good accuracy. The nice thing about this test is that you can perform it on the very equipment that you will be using to watch movies, if you hook up your computer to e.g. your projector. This allows you to determine what degree of detail you can discern in the most ideal case on that equipment, and whether it makes any sense to upgrade it to ultra-HD.

Follow this link to perform your visual acuity test.

When you have successfully performed the test, you will have two numbers W and D that you can enter in the calculator below.

How the Test Works

Why is the acuity test I provide on this website valid? It relies on a pattern of one-pixel-wide black-white alternating stripes. This is the highest possible spatial frequency that your display or projector can represent according to the Nyquist-Shannon sampling theorem, at the highest possible contrast. This makes it the limit signal for determining visual acuity. The point where you can no longer discern between this signal and a solid grey area, is the limit of your visual acuity. Any realistic image will contain details that will be at most as sharp as this, therefore if your eyes can no longer resolve the detail in these test images, they certainly will not notice any increase in detail in an average film frame displayed on the same screen.
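To make the idea of this limit signal concrete, here is a minimal sketch that generates one-pixel-wide stripe patterns and saves them as greyscale PGM files. It is not the code behind the actual test page; the function names, file names and the 256-pixel size are hypothetical choices of mine.

```python
def stripes(width, height, vertical=True):
    """Return rows of grey values (0 or 255) forming one-pixel-wide stripes."""
    if vertical:
        # Every row is identical: black, white, black, white, ...
        row = [255 * (x % 2) for x in range(width)]
        return [list(row) for _ in range(height)]
    # Horizontal stripes: whole rows alternate between black and white.
    return [[255 * (y % 2)] * width for y in range(height)]


def write_pgm(path, rows):
    """Write the rows as a binary PGM (P5) greyscale image."""
    height, width = len(rows), len(rows[0])
    with open(path, "wb") as f:
        f.write(f"P5\n{width} {height}\n255\n".encode("ascii"))
        for row in rows:
            f.write(bytes(row))


if __name__ == "__main__":
    write_pgm("stripes_vertical.pgm", stripes(256, 256, vertical=True))
    write_pgm("stripes_horizontal.pgm", stripes(256, 256, vertical=False))
```

Such a pattern is only meaningful when it is shown at the native resolution of the display, with one image pixel per screen pixel. Any zooming or scaling by the viewer or the display blurs the stripes before your eyes get a chance to.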

Given that there is an upper limit on the viewing distance, there must also be a lower limit. This lower limit would be the distance where you become able to discern the size of individual pixels, as opposed to seeing them as the smallest dots your eyes can resolve. This requires your eyes to be able to resolve the individual edges of each pixel, which becomes possible at distances below 50% of the limit distance. This means that you do not need to sit exactly at the acuity distance to get the full benefit of the maximum resolution: anywhere between that distance and half of it is good enough. For instance at 60% of this distance as shown in the above figure, you will resolve every single pixel without overlap, while the image will appear larger than at the maximum distance. For this reason, the 60% point is used in the calculators below to determine the ‘optimal’ distance.

Screen Size vs. Viewing Distance Calculator

This one is for the techies. For most people, the simplified calculator will be more practical.

The calculator outputs two distance values for 4K and other common video resolutions, expressed in multiples of the screen width: the viewing distance where you cease to be able to discern all its details, and an optimal distance where you should see all details. The equation we need for this is Dmax = 1/(pixH⋅tan(α)), where pixH is the number of pixels in the horizontal direction of the image. I experimentally determined that the ‘optimal’ distance is about 60% of the limit distance. If you do not want to take my word for it, just repeat the test but this time, measure D as the largest distance where you are 100% confident that you can see the difference between the vertical and horizontal lines anywhere in the image (not just around the dividing line where the difference is easiest to see).
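The equation is simple enough to try for yourself. The following is a minimal sketch rather than the calculator's actual code. I assume here that α is entered in arc minutes, and I use 3840 and 7680 as the horizontal pixel counts for 4K and 8K.

```python
import math

OPTIMAL_FACTOR = 0.6  # the 60% factor described in the text

# Horizontal pixel counts (pixH) per format; 4K is taken as UHD (3840).
FORMATS = {"720p": 1280, "1080p": 1920, "4K": 3840, "8K": 7680}


def limit_distance(pix_h, alpha_arcmin):
    """Dmax = 1 / (pixH * tan(alpha)), in multiples of the screen width."""
    alpha_rad = math.radians(alpha_arcmin / 60.0)
    return 1.0 / (pix_h * math.tan(alpha_rad))


def optimal_distance(pix_h, alpha_arcmin):
    """The 'optimal' distance: 60% of the limit distance."""
    return OPTIMAL_FACTOR * limit_distance(pix_h, alpha_arcmin)


alpha = 1.0  # arc minutes, the value listed earlier for normal eyesight
for name, pix_h in FORMATS.items():
    d_max = limit_distance(pix_h, alpha)
    d_opt = optimal_distance(pix_h, alpha)
    print(f"{name:>5}: limit {d_max:.2f} x width, optimal {d_opt:.2f} x width")
```

For normal eyesight this puts the optimal distance for 1080p at slightly more than one screen width, and for 4K at roughly half a screen width.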

For each of the frame sizes, you can also compute the optimal width of the screen given your acuity limit and the viewing distance, or the optimal viewing distance given the screen width. The values given here include the 60% factor to ensure you will not miss any detail.

Finally, you may also compute the maximal useful (horizontal) image resolution given your acuity limit, the width W₀ at which you could project the image, and the distance D₀ from which you would like to view it. Enter the desired values, make sure the correct value for α is still filled in, and click the last ‘Compute’ button. The equation in this case is: pixH = W₀/(D₀⋅tan(α)).
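As a quick illustration of this last equation, here is a sketch with made-up input values: an image projected 1 metre wide, viewed from 3 metres, by someone with normal eyesight. I again assume α is given in arc minutes. Note that the equation as written uses the limit distance, so it contains no 60% factor.

```python
import math


def max_useful_pixels(width, distance, alpha_arcmin):
    """Maximal useful horizontal resolution: W / (D * tan(alpha)).

    width and distance must be expressed in the same unit.
    """
    alpha_rad = math.radians(alpha_arcmin / 60.0)
    return width / (distance * math.tan(alpha_rad))


# Hypothetical example values, not taken from the article.
pixels = max_useful_pixels(width=1.0, distance=3.0, alpha_arcmin=1.0)
print(f"Maximal useful horizontal resolution: {pixels:.0f} pixels")
```

In this hypothetical situation the result is about 1146 pixels, well below the 1920 horizontal pixels of 1080p.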

Screen Size vs. Viewing Distance Calculator (Simplified)

The two results shown in the format recommendation are to be interpreted as follows: the “optimal” format is the one that will let you see all the details in the image without discerning individual pixels. If the “no point above” format is larger, it will only in theory be able to show more detail. In practice you will have a very hard time telling the difference and you will likely miss some of the details, so it is up to you to decide whether to invest in it. Especially if this drops from e.g. 4K to 1080p when slightly increasing the distance, you can be pretty certain that you will never see any difference between 1080p and 4K in that situation.

Practical Examples

Armed with my acuity test and calculators, it is time for some real-world examples. I measured my own acuity limit to be 0.60 arc minutes, which is quite a bit better than the average of one arc minute reported on Wikipedia. This agrees with ‘official’ eye tests as well that give me a better than average score.

Suppose I have a 42" 1080p television. This means a screen width of 36.6". At what distance would I need to view it to see all details comfortably? The calculator says: 65.5 inches, or 166 cm. That is already closer than the distance I would spontaneously use. Imagine I were watching that TV together with three other people who want to be certain to see all pixels. Things would get very cramped, especially if those other people had eyes more average than mine and therefore needed to sit even closer.

Now if that same television were 4K, the recommended distance would become 33 inches or 84 cm. Yeah. So let's take the inverse approach and suppose that I want to sit at least three metres from the screen. How wide does my 4K TV or projection need to be? That's 3.4 metres, and 1.9 metres high for a 16:9 aspect ratio. I would need to clear a lot of stuff to get an empty white wall to project an image of that size. I can go on like this, but all examples would show that 1080p is already at the limit of what is practical unless I could convert a large room in my house into a dedicated cinema. And remember, my eyes are much better than average. Someone with standard 20/20 sight would need a screen more than 5 metres wide in this case.
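The television figures above can be reproduced with a few lines of code. This is a sketch of the arithmetic under the same assumptions as the calculator equations (α in arc minutes, the 60% factor included), not the calculator itself; the function names are mine.

```python
import math

OPTIMAL_FACTOR = 0.6  # 60% of the limit distance


def tan_alpha(alpha_arcmin):
    return math.tan(math.radians(alpha_arcmin / 60.0))


def width_from_diagonal(diagonal, aspect=(16, 9)):
    """Screen width for a given diagonal and aspect ratio."""
    a, b = aspect
    return diagonal * a / math.hypot(a, b)


def optimal_distance(width, pix_h, alpha_arcmin):
    """Optimal viewing distance, in the same unit as width."""
    return OPTIMAL_FACTOR * width / (pix_h * tan_alpha(alpha_arcmin))


def optimal_width(distance, pix_h, alpha_arcmin):
    """Screen width for which the given distance is the optimal one."""
    return distance * pix_h * tan_alpha(alpha_arcmin) / OPTIMAL_FACTOR


MY_ALPHA = 0.60  # arc minutes, the acuity limit measured above

print(f"42 inch diagonal: {width_from_diagonal(42):.1f} inch wide")
print(f"1080p at 36.6 inch: {optimal_distance(36.6, 1920, MY_ALPHA):.1f} inch")
print(f"4K at 36.6 inch:    {optimal_distance(36.6, 3840, MY_ALPHA):.1f} inch")

w = optimal_width(3.0, 3840, MY_ALPHA)
print(f"4K from 3 m: {w:.2f} m wide, {w * 9 / 16:.2f} m high")
print(f"4K from 3 m, 20/20 sight: {optimal_width(3.0, 3840, 1.0):.2f} m wide")
```

The small differences with the rounded numbers in the text (for instance 32.8 versus 33 inches) are just rounding.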

What about a 4K smartphone? Assuming a screen width of 12 cm, which for me is the upper limit for a practical phone, the optimal viewing distance to benefit from a 4K display would be about 7 cm for normal vision (with my sharp eyesight, I could do with 10 cm). Right. The only visible effect such a display would have is shorter battery life due to all the extra processing required to drive all those pixels and haul huge video streams across networks.

To add insult to injury, the visual acuity limit measured here is only valid in a tiny area around the spot where you are focusing. The resolution of your eyes drops quickly outside the fovea (you can verify this by repeating the acuity test while focusing on a spot next to the actual test area). Therefore unless you are frantically scanning around the image all the time, you are going to miss most of that high resolution anyway. The point of using such high resolutions is to allow the spectator to focus on anything and to ensure it will be sharp.

Regarding field-of-view, playing around with the calculators will reveal that for someone with normal vision watching from the recommended distance, 1080p offers a horizontal FOV of 50° while 4K offers 86° and 8K offers 124°. For a cinema image, there is little point in projecting outside the area that is covered by both eyes, which is about 120° of the human horizontal FOV. This means that 8K could still be useful to offer the sharpest discernible resolution inside this entire area. However, playing around with the calculator will also reveal that any comfortable viewing setup for an 8K display in that situation needs to be insanely large. Trying to give a spectator a 120° FOV with planar projection technology is way beyond the limits of the practical.
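These field-of-view figures follow directly from the optimal distance. A minimal sketch, assuming a flat screen viewed head-on from its centre line, α in arc minutes, and the 60% factor for the recommended distance:

```python
import math


def horizontal_fov_deg(pix_h, alpha_arcmin, factor=0.6):
    """Horizontal field of view (degrees) at the recommended distance."""
    alpha_rad = math.radians(alpha_arcmin / 60.0)
    # Recommended distance in multiples of the screen width.
    distance = factor / (pix_h * math.tan(alpha_rad))
    # Half the screen width subtends atan(0.5 / distance) on each side.
    return math.degrees(2.0 * math.atan(0.5 / distance))


for name, pix_h in (("1080p", 1920), ("4K", 3840), ("8K", 7680)):
    print(f"{name:>5}: {horizontal_fov_deg(pix_h, 1.0):.1f} degrees")
```

Because the angle grows with the arctangent of the screen width, each doubling of the resolution adds less field of view than the previous one.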

The Big Stick: Colour Space

Perhaps the entertainment industry has been well aware of the fact that increased resolution alone does not make it easy to convince the average consumer to buy new equipment. Even when setting up demos with huge screens, the difference will seldom offer a true ‘wow’ factor, and pesky spectators having common sense will object that they simply do not have room for such a large display. It may be for this reason that the 4K format is tied to a new and improved colour space. Most likely however, it is just a matter of technological progress.

The ultra-HD format is defined by the BT.2020 standard which is tied to a different colour space than the BT.709 standard that governs Full-HD. The BT.2020 colour space is able to represent a much wider range of colours (at the cost of requiring more bits per pixel to maintain at least the same colour resolution as in BT.709). What this means in practice, is that an ultra-HD television is able to display a wider colour range than a Full-HD television—in theory.
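To get a rough feeling for how much wider the range is, one can compare the triangles spanned by the red, green and blue primaries of both standards in the CIE 1931 xy chromaticity diagram. The sketch below does this; keep in mind that the xy diagram is not perceptually uniform, so the area ratio is a crude indication and not a measure of how many more colours one would actually perceive.

```python
# CIE 1931 xy chromaticity coordinates of the red, green and blue primaries.
BT709 = [(0.640, 0.330), (0.300, 0.600), (0.150, 0.060)]
BT2020 = [(0.708, 0.292), (0.170, 0.797), (0.131, 0.046)]


def triangle_area(primaries):
    """Area of the triangle spanned by three (x, y) points."""
    (x1, y1), (x2, y2), (x3, y3) = primaries
    return abs(x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2)) / 2.0


area_709 = triangle_area(BT709)
area_2020 = triangle_area(BT2020)
print(f"BT.709 gamut area:  {area_709:.4f}")
print(f"BT.2020 gamut area: {area_2020:.4f}")
print(f"Ratio: {area_2020 / area_709:.2f}")
```

By this simple measure the BT.2020 triangle covers almost twice the area of the BT.709 one.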

In my opinion this is a much more worthwhile reason to upgrade equipment than the increased resolution. When viewing material recorded with the full BT.2020 gamut side-by-side on two equal-size TVs, one of which is ultra-HD with full colour space capability, and the other Full-HD with colours clipped to the BT.709 space, the image will look visibly more vibrant on the ultra-HD screen even when standing too far away to discern the higher resolution. This has a much better chance of offering a true ‘wow’ factor than merely higher resolution.

This has to be taken with a pinch of salt however, because the BT.709 colour space is pretty capable of representing everyday video content with satisfactory fidelity, and our eyes are good at compensating for the limitations of the colour space. The improvement is only truly obvious in a side-by-side comparison.

Of course there is no technical reason whatsoever why a display would need to have full 4K resolution to be able to display the BT.2020 colour space. Neither is there any reason why a 1080p resolution movie couldn't be stored using this colour space. The industry will however likely want to enforce that any recording using the BT.2020 colour space must also have at least UHD resolution, for two reasons. The first is to guarantee that anything related to the UHD brand will actually have ‘ultra HD’ resolution. Nobody having invested in a premium UHD display should run the risk of not always getting all the promised pixels! The second is to force consumers who want to benefit from the better colours to buy more expensive high-resolution equipment. I predict however that when the technology to make wide-gamut displays becomes more commonplace, we will see smaller displays being sold by ‘non-premium’ brands, with only 1080p or even 720p resolution but still capable of reproducing the entire gamut of BT.2020 colours. They will simply downscale any higher-resolution input to their own lower resolution. At the time of this writing this is already happening with certain smartphones. From there on, it is only a small step up to affordable (and practical) television sets that do not take up an entire wall but are able to display more vibrant colours.

Conclusion

I can keep the conclusion short: a 4K screen is only useful if it is huge or if one sits very close to it. In typical setups with reasonably-sized television screens and comfortable viewing distances, increasing the resolution from 1080p to 4K brings no visible improvement. The chances are slim that the average consumer will have any immediate benefit from upgrading their home equipment to 4K. The format is useful for specific applications, for instance in medical contexts, and for content creators, and the mere fact that the latter will be using it will also have a positive impact on the quality of 1080p material. But the average public that just wants to watch a movie will not care about it, and shouldn't. If the appreciation of a certain movie depends on it being projected in 4K or 8K, this movie surely cannot be more than a gimmick not worth watching more than once. Only a small group of enthusiasts will be willing to pay a premium price for a 4K projector and convert one of the rooms in their house into a cinema, which is pretty much mandatory to get any benefits from the format without having to sit uncomfortably close to a smaller screen.

Only the potentially improved colour reproduction that is tied to the UHD standard could make an upgrade worthwhile. It will take a while however before the majority of movie material, playback devices, and displays support the improved BT.2020 colour space. If any component in this chain supports less than the entire space, then it will be this lowest quality that reaches your eyes. Until this full colour space chain becomes commonplace, there is no point at all in upgrading anything unless you need the higher resolution for its own sake.

These conclusions about 4K make it easy to also draw a conclusion about the 8K format, which is also supposed to be aimed at consumer televisions if I am to believe Wikipedia at the time of this writing. Because the name again refers to the horizontal resolution, 8K has four times as many pixels as 4K: about 33 megapixels. Given that the number of situations where 4K is useful is already small, the usefulness of 8K for a planar display is downright marginal.

There are applications where even this insane resolution may be useful, for instance 360° VR video or dome projections, which need to cover a larger total area. (For a 360° video to be viewed at life-like acuity, 8K isn't even enough.) However, VR video is a gimmick that is fun for a short while but fatiguing and annoying in the long term. Just like 3D, it is not necessary or even desirable for normal movies or TV shows, and it is only really practical with VR goggles. Given that dedicating at least half a room to a home cinema is already a stretch for many, it is just ludicrous to expect the average household to turn an entire room of their house or apartment into a dome projection theatre, especially when only a very limited amount of video material is available that would use it to its full potential.

This is not the first time digital image resolutions have spiralled out of control. The pointless megapixel race for digital still cameras has been going on for years and has resulted in horribly noisy sensors that need a thick fat layer of denoising algorithms to produce output that does not look entirely ridiculous, and is often worse than what can be obtained with a lower resolution sensor that requires far less post-processing. It seems humanity likes to bump its head against the same stone over and over again.